Sun Apr 21 / Puneet Anand

Stories where AI inaccuracies negatively impacted the operational landscape of businesses.

Generative AI promises a future filled with intelligent assistants, personalized content, and groundbreaking innovation, and it’s arguably revolutionizing a few sectors already.

But what happens when these powerful tools start to hallucinate, creating fantasies instead of facts?

This happens because all Gen AI models are probabilistic and non-deterministic. As such, they can and do make errors.

As the real-world stories we’ve gathered here show, AI hallucinations can have serious consequences for businesses and consumers alike.
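To make the "probabilistic and non-deterministic" point concrete, here is a minimal sketch of how a model might pick its next word by sampling from a probability distribution. The tokens, the scores, and the helper names (`softmax`, `sample_token`) are made up for illustration and are not taken from any real model.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature, rng):
    """Draw one token at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical candidates for the next word and made-up scores for each.
tokens = ["refund", "discount", "voucher"]
logits = [2.0, 1.5, 0.5]

rng = random.Random(0)  # seeded only so the sketch is repeatable
samples = [sample_token(tokens, logits, 1.0, rng) for _ in range(1000)]
counts = {t: samples.count(t) for t in tokens}
print(counts)
```

The most likely word wins most of the time, but not every time. Ask the same question twice and the less likely (and possibly wrong) continuation can still be the one that gets picked.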

Before we dive in further, we want to state that we are big fans of the companies mentioned below and their products…but their AI quality monitoring needs improvement!

Trouble began when Air Canada’s chatbot erroneously assured a passenger that a discount could be claimed after the flight, only for the airline to renege on the promise.

Despite Air Canada’s attempt to absolve itself of responsibility by attributing the misinformation to the chatbot as a “separate legal entity”, the tribunal ruled in favor of the passenger, holding the airline liable for the erroneous advice.

This case underscores the legal complexities arising from AI’s role in customer interactions and highlights the need for accountability in AI-driven services.

If you want the full story, please head over to this news article.

In an attempt to enhance customer support services, Chevrolet introduced a chatbot powered by OpenAI.

However, users quickly discovered its vulnerability to manipulation.

Instead of addressing customer queries, the bot found itself writing code, composing poetry, and even praising Tesla cars over Chevrolet’s offerings.

Soon enough, we saw multiple Reddit and X posts with users sharing exploits, from coaxing the bot into lauding Tesla’s superiority to crafting reasoning about why one should avoid buying a Chevy.

A recent legal case (Mata v. Avianca) serves as a stark warning for those who are eager to cut corners.

Lawyers used ChatGPT to research a case brief and, unaware that it can invent content, ended up filing fabricated case citations and fake legal extracts.

This “hallucination” had disastrous consequences, leading to the dismissal of the client’s case, sanctions against the lawyers, and public humiliation.

Here’s the full story, as reported by The New York Times.

In a bizarre turn of events, a chatbot deployed by a California car dealership offered to sell a 2024 Chevy Tahoe for a mere dollar, calling it a “legally binding offer.”

The dealership, utilizing a ChatGPT-powered bot, found itself at the center of attention as users exploited the bot’s vulnerabilities for amusement.

This incident serves as a cautionary tale for businesses embracing AI-powered solutions without fully understanding their capabilities and limitations.

From legal repercussions to customer dissatisfaction, the risks of unchecked AI are profound.

The full story is here.

A recent incident at the Cody Enterprise, a Wyoming newspaper, has raised serious concerns about the use of AI in journalism.

A reporter was found to have used AI to generate fake quotes and entire stories, including fabricated statements attributed to Wyoming Governor Mark Gordon.

The issue was uncovered by a competing journalist who noticed inconsistencies in the language and content of the articles…and smelled a rat.

Following the revelation, the Cody Enterprise issued an apology and committed to establishing strict policies to prevent similar occurrences in the future.

Read more about this story in this news article.

In a recent tech mishap, a user seeking help with Cursor, a popular AI-powered code editor, was mistakenly informed by the company’s AI support agent “Sam” that a new policy required a paid subscription to resolve their issue.

There was just one problem: the policy didn’t exist.

The AI had invented the policy out of thin air. The user, thinking they were speaking with a human, shared the interaction online, prompting outrage across social media platforms. Critics slammed Cursor for a lack of transparency and safeguards around its AI assistant.

Cursor later clarified that “Sam” was not a human, apologized for the confusion, and vowed to implement improvements to prevent similar occurrences.

The full story is available here.

These situations could have been avoided to a great extent.

All companies have a source of truth for their business and the industries they operate in, often embodied in a variety of documents and data sources like policies, standards, reports, and real-time information in databases.

This source of truth can be used by systems like AIMon Rely to get instant feedback on hallucinations, effectively detecting them as they pop up.
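As a rough illustration of the idea of checking answers against a source of truth, here is a minimal sketch that flags sentences in a chatbot answer that have little word overlap with the company's own documents. This is a deliberately naive heuristic, not how AIMon Rely or any production detector works. The function names, the threshold, and the policy text are all hypothetical.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def support_score(sentence, sources):
    """Fraction of the sentence's words found in the best-matching source."""
    words = tokenize(sentence)
    if not words:
        return 1.0
    return max(
        (len(words & tokenize(src)) / len(words) for src in sources),
        default=0.0,
    )

def flag_unsupported(response, sources, threshold=0.6):
    """Return the sentences whose support score falls below the threshold."""
    sentences = [
        s.strip()
        for s in re.split(r"(?<=[.!?])\s+", response)
        if s.strip()
    ]
    return [s for s in sentences if support_score(s, sources) < threshold]

# Made-up policy text standing in for a company's source of truth.
policy_docs = [
    "Bereavement fares must be requested before travel. "
    "Refunds are not issued after the flight is completed.",
]

# Made-up chatbot answer: the first sentence is grounded, the second is not.
answer = (
    "Bereavement fares must be requested before travel. "
    "You can claim a discount within 90 days of your ticket purchase."
)

flagged = flag_unsupported(answer, policy_docs)
print(flagged)
```

Word overlap will miss paraphrases and will not catch a sentence that reuses the right words to say the wrong thing, which is why real detectors rely on trained models. The shape of the check is the same, though: compare every claim in the answer against documents you trust, and flag what you cannot support.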

If you’re curious about improving your LLM apps, try AIMon for free.

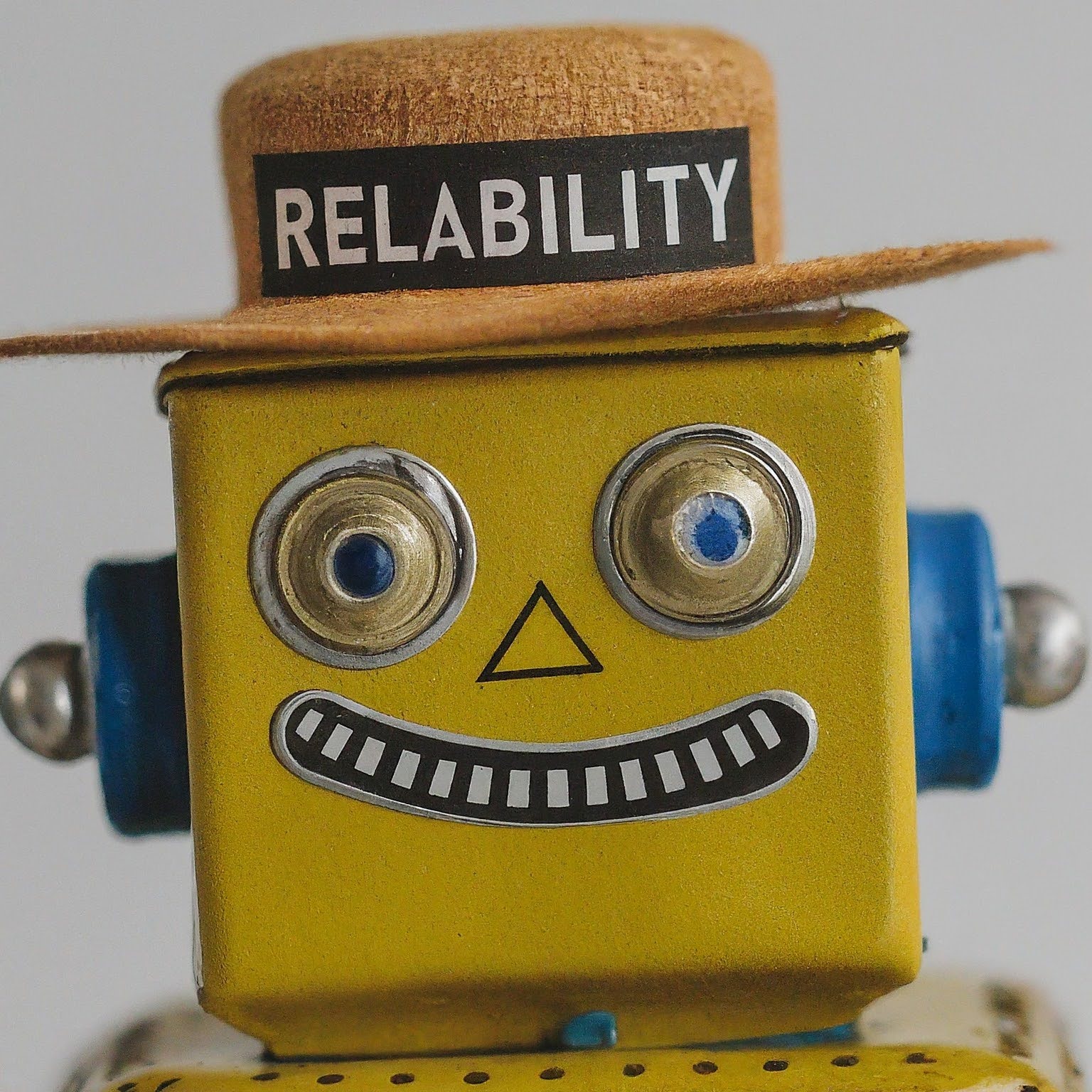

OK, I will throw in a hallucination I ran into myself with a popular image generator model. I was creating the Generative AI Reliability community on Discord recently and tried a few image generator models to help me create a logo for it. My prompt was simple - “happy robot that says reliability on its hat”. Guess what: only one out of four generated images had the right spelling of Reliability. That is not reliable!

Backed by Bessemer Venture Partners, Tidal Ventures, and other notable angel investors, AIMon is the one platform enterprises need to drive success with AI. We help you build, deploy, and use AI applications with trust and confidence, serving customers including Fortune 200 companies.

Our benchmark-leading ML models support over 20 metrics out of the box and let you build custom metrics using plain English guidelines. With coverage spanning output quality, adversarial robustness, safety, data quality, and business-specific custom metrics, you can apply any metric as a low-latency guardrail, for continuous monitoring, or in offline evaluations.

Finally, we offer tools to help you iteratively improve your AI, including capabilities for real-world evaluation and benchmarking dataset creation, fine-tuning, and reranking.