Tue Sep 10 / Puneet Anand

Join us as we examine the key layers of an LLM tech stack and help identify the best tools for your needs.

With a wide variety of AI tools and services at every layer of the tech stack, choosing the right components for LLM applications requires carefully assessing goals, constraints, and requirements.

This guide will walk you through the key layers of an LLM tech stack and provide insights into selecting the best tools for your needs.

If you’re wondering what is an LLM tech stack, let’s make sure we’re all on the same page from the get-go:

An LLM tech stack refers to the combination of technologies, tools, and frameworks used to build, deploy, and scale large language model applications. The exact stack may vary depending on specific use cases, but the components are generally common across most LLM applications.

Before diving into specific tools and platforms, it’s essential to consider the key factors that will influence your decision:

Use case: Are you building a chatbot, an AI-powered document search tool, or a code generation system? Different use cases may require other tools, so define yours first and foremost.

Data availability: Do you have access to large datasets, or will you need to rely on external data sources, or create synthetic datasets? Is the data structured or unstructured? Checking your available “data profile” now will save you time in the future.

Scalability: Will your application need to handle large-scale deployments and real-time interactions or work in offline batch mode instead?

Latency and performance: Related to scalability - how critical are low latency and high performance in your use case? (In Beckham’s voice: “Be honest”).

Budget: Proprietary tools and cloud providers often have associated costs, while open-source solutions may offer more flexibility at a lower price (be ready for some manual labor though).

With these factors in mind, let’s dive into the layers of the LLM tech stack and explore the components of each layer.

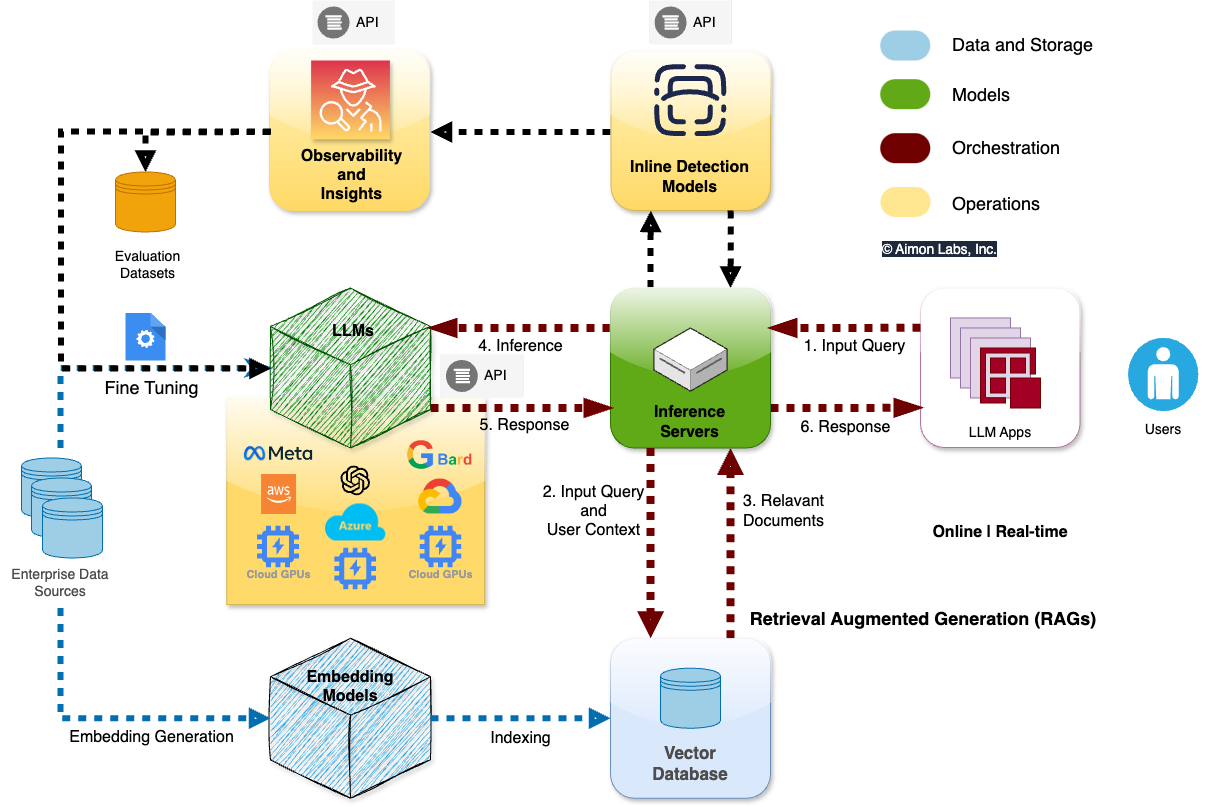

The LLM tech stack can be divided into several layers, each serving a purpose in building, deploying, and maintaining AI-driven apps, and include the:

Now let’s take a look at each of these to get a better understanding of them.

The data layer is foundational to any LLM-powered application, as it manages the data required for model training and relevant context enrichment at query time.

A well-designed data layer ensures that the model has access to high-quality, relevant data in a format it can process efficiently.

Data pipelines: Tools like Apache Airflow and KubeFlow enable the flow of data from raw sources to the model. They manage data ingestion, preprocessing, and transformation.

Embedding models: Embedding models like OpenAI’s Text Embedding Models or Sentence Transformers convert raw text into vector representations, which in turn are processed by LLM Apps for tasks like similarity search and information retrieval.

Vector databases: Solutions like Milvus, Pinecone, and Weaviate store and retrieve vector data. These databases are essential for implementing efficient similarity searches, enabling the LLM apps to pull relevant information dynamically when serving the query.

The model layer is at the heart of your LLM tech stack - it’s where you choose the pre-trained models that best align with your use case, whether you’re leveraging proprietary models or open-source alternatives.

Proprietary LLMs and APIs: If you require access to cutting-edge language models, you can rely on proprietary APIs such as OpenAI’s GPT-4 or Anthropic’s Claude. These models offer advanced capabilities but come with higher costs.

Open source LLM APIs: When budget or customization becomes a concern, open-source models like Llama 3.1 provide flexibility to deploy and fine-tune models on your infrastructure.

RAG (Retrieval-augmented Generation): Integrating RAG allows you to enhance model performance by fetching relevant external data during inference. This is crucial for use cases where the model’s base knowledge needs to be supplemented with real-time information. Typically, the vector database (see the section above) setup is used for this purpose, but based on the use case, we’ve seen companies utilize existing data stores like RDBMS too.

Do you require a proprietary model with a large token limit, like GPT-4 Turbo (128,000 tokens), or will an open-source model like Llama 2 (4,096 tokens) suffice?

What tasks do you want your LLM app to serve? Different models are trained for different purposes. For example, while the GPT 4o model is optimized for complex tasks, the GPT Base model is optimized for natural language - but not instruction following.

Will your application need fine-tuning, or can you rely on in-context learning for dynamic tasks or both?

The orchestration layer manages the flow of data and responses between the LLM, external systems, and end users.

This layer ensures smooth interactions, prompt optimization, and response management.

Key components of the orchestration layer:

Orchestration frameworks: Platforms like LangChain and LlamaIndex are popular choices for orchestrating LLM workflows, allowing you to manage multi-step interactions, handle dynamic prompt creation, and integrate external knowledge sources.

APIs / Plugins: Custom APIs or plugins from OpenAI, Zapier, Make, or other services provide integrations between LLMs and other apps, allowing you to extend the model’s capabilities.

LLM caches: Tools like Redis or Memcached can store frequently-used responses, reducing latency in high-throughput systems and improving efficiency.

The operations layer handles the deployment, scaling, and monitoring of LLM-powered applications in production environments.

This layer is crucial for maintaining reliability and performance as the system scales to handle larger workloads.

Logging, monitoring, and evals: Traditional observability tools like Datadog extend into logging and monitoring for LLMs, ensuring that you can track the raw performance of your LLM system, while tools like AIMon enable you to strike all three birds with a single tool:

Cloud providers: Leading cloud providers like AWS, Google Cloud, and Microsoft Azure offer compute resources optimized for LLM workloads, including dedicated hardware like GPUs and TPUs.

Specialized AI clouds: For teams seeking an optimized cloud environment for LLMs, specialized providers like Hugging Face offer managed environments tailored for LLM deployment, often bundled with model repositories, evaluation tools, and hosting services.

Selecting the tools and services for your LLM tech stack depends on specific needs, resources, and the complexity of the use case.

Here’s a simplified 4-step approach to help you decide:

Evaluate your data needs: Start with your data layer. Consider whether you need advanced vectorized search capabilities (e.g., Pinecone) or robust data pipelines (e.g., Airflow).

Select the model: Choose between proprietary APIs (e.g., OpenAI GPT-4) or open-source models (e.g., Llama 3) based on your budget, desired performance, and fine-tuning needs.

Orchestrate efficiently: Use LlamaIndex to orchestrate interactions between your LLM and external systems, especially if you’re integrating with APIs or databases. Build key integrations first, and document potential iterations for when you need them.

Deploy and monitor: Make sure your operations layer is scalable and observable, using cloud platforms like AWS or specialized clouds like Hugging Face, alongside observability tools like AIMon.

Whether you’re building a simple chatbot or a complex AI-driven application, understanding the role of each layer and picking the right tools is key to delivering a scalable, reliable, and efficient solution.

As the ecosystem evolves, new tools and best practices will emerge, but that’s not a problem to worry about today - now, it’s time to build, scale, and improve your apps!

Backed by Bessemer Venture Partners, Tidal Ventures, and other notable angel investors, AIMon is the one platform enterprises need to drive success with AI. We help you build, deploy, and use AI applications with trust and confidence, serving customers including Fortune 200 companies.

Our benchmark-leading ML models support over 20 metrics out of the box and let you build custom metrics using plain English guidelines. With coverage spanning output quality, adversarial robustness, safety, data quality, and business-specific custom metrics, you can apply any metric as a low-latency guardrail, for continuous monitoring, or in offline evaluations.

Finally, we offer tools to help you iteratively improve your AI, including capabilities for real-world evaluation and benchmarking dataset creation, fine-tuning, and reranking.